While awareness of discriminatory biases based on gender or ethnicity has grown considerably in recent years, especially in the context of fairness in Machine Learning, many datasets carry biases that the researcher is unaware of, and models trained on them are sub-optimal as a result. Selection bias identification and mitigation strategies that are general enough to be a fixed component of every Machine Learning pipeline are crucial: they enable the researcher to improve data quality by mitigating the bias instead of training a biased model.

Our research focuses on developing new algorithms that identify or mitigate bias in datasets and Machine Learning models. We are also interested in how bias in Machine Learning models affects real-world applications and in the limitations it imposes.

Joerg Simon Wicker

Katharina Dost

Pat Riddle

Katerina Taskova

Activities

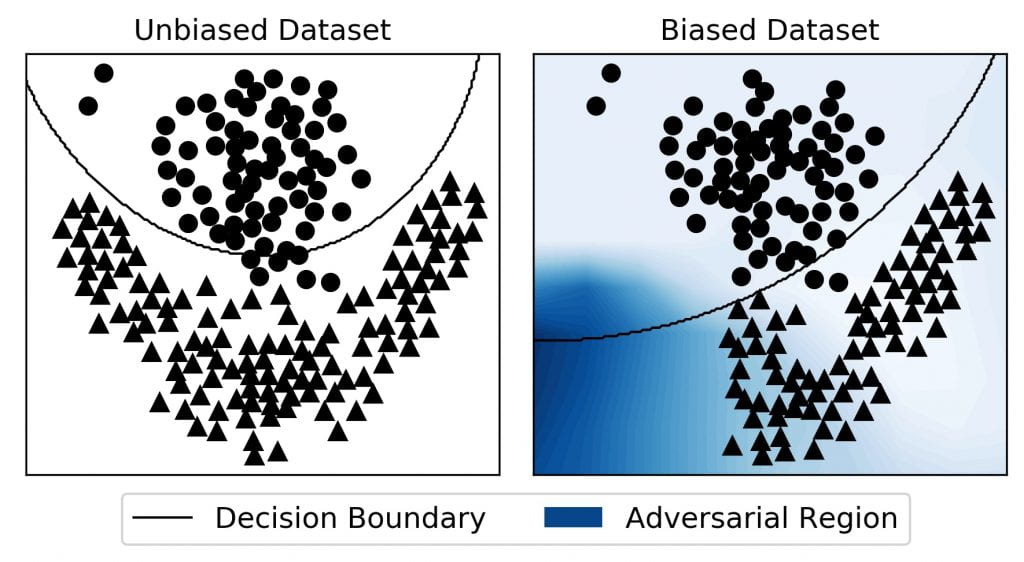

Summer Project: Auditing Machine Learning Models – Quantifying Reliability using Adversarial Regions

Summary: We aim to design and develop new methods to attack machine learning models and identify a lack of reliability, for example one related to bias in the data and model. These issues can cause problems in various applications, caused by weak performances of...

ML Student Seminar Apr 22 2021: Domain Adaptation and Bias Mitigation for Regional Varieties of Languages

Speaker: Annie Lu, PhD student, supervised by Yun Sing Koh and Joerg Wicker. Abstract: Regional varieties of languages, such as dialects, have been shown in linguistics to have different syntactic and semantic features. However, these dialects have low...

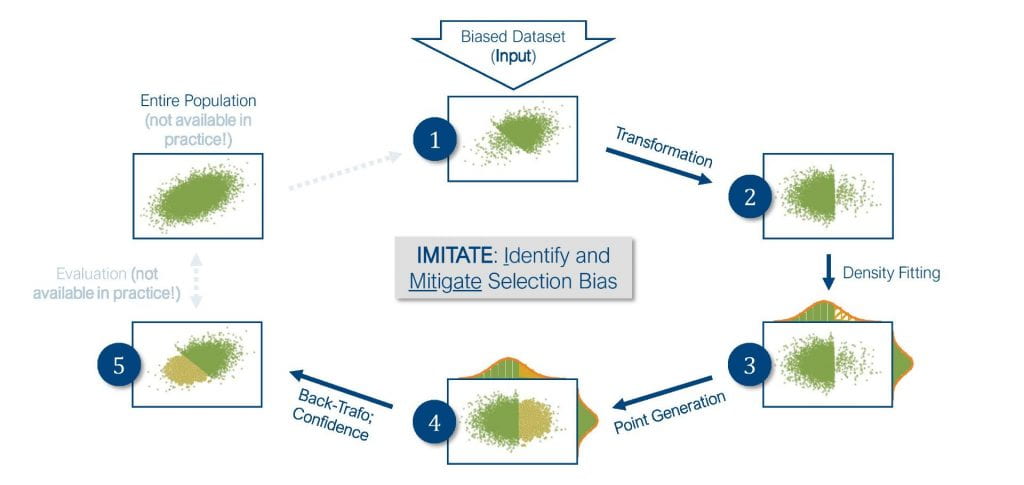

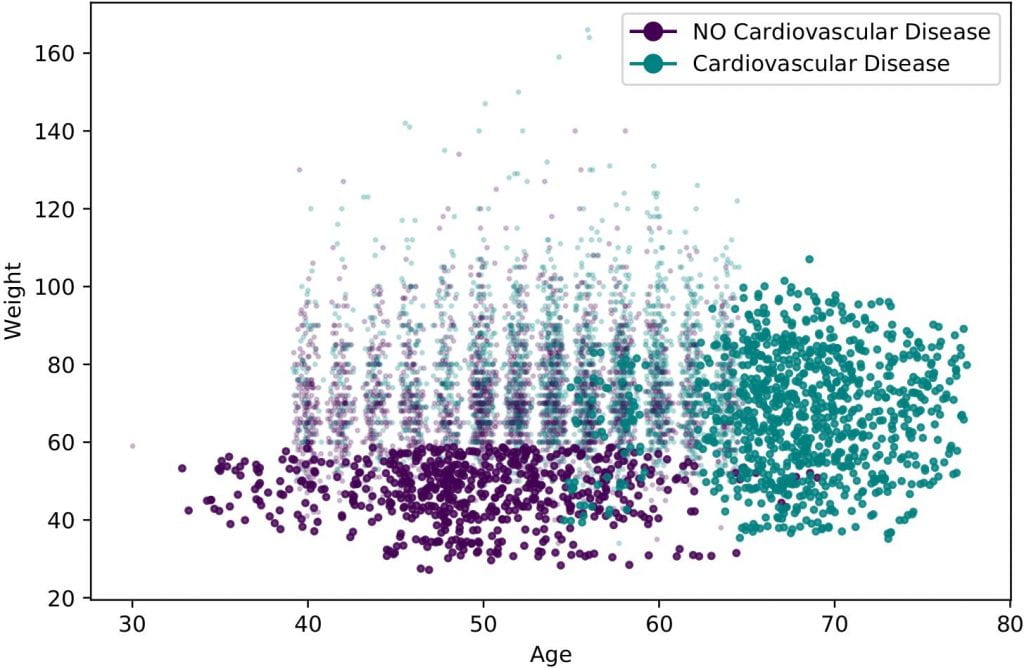

Identification and Mitigation of Selection Bias

Kia Ora! I am Katharina Dost, a second-year PhD student in the School of Computer Science. My research topic is “Identification and Mitigation of Selection Bias”, and I would like to use this post to talk about my research and my experiences, so read on! Our...