I am Beryl Qi, and my research topic is ‘Knowledge-Driven Text Generation’. Natural language generation (NLG) is an important research direction in natural language processing. The technology can be applied to a variety of information-processing tasks, such as question answering (QA), information extraction (IE) and problem decomposition (PD). The wide application of NLG stems from its ability to learn from, and process, the explosive growth of data.

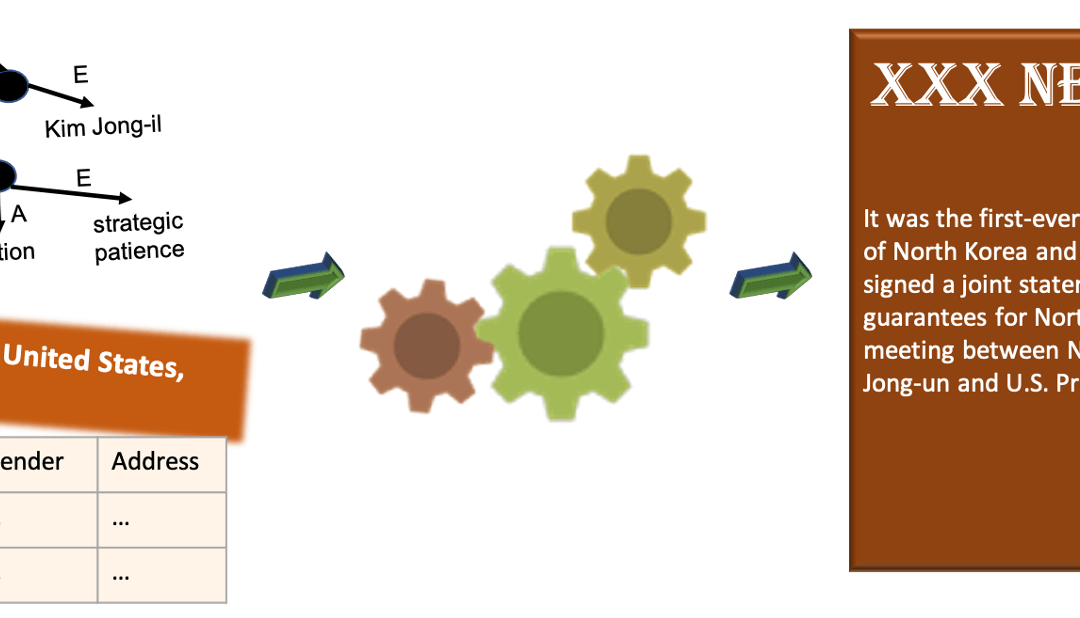

The overall system we plan to build is a multi-input system comprising a keyword-extraction module, an iterative sentence-generation module, a text-frame discourse updater and a text generator. The planned text-frame updater is a semantic discourse module that updates a frame as new inputs arrive, including the outputs of previous iterations. For the text generator, we propose implementing a variational autoencoder (VAE) that controls the output through a latent representation of text structure. Understanding this model and integrating it into the text generation system is a further task.

Some preliminary experiments have already been conducted to test the feasibility of using GPT-2 as the foundation model for conditional text generation. The initial results show that GPT-2 can be fine-tuned to adapt to downstream tasks. In this initial experiment we used low-frequency words as keywords, but in the future we may adopt other keyword-extraction methods.
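To make the keyword step more concrete, here is a minimal Python sketch of one way "low-frequency words as keywords" could work. It assumes word frequencies are counted over a reference corpus, uses a deliberately simple lowercase regex tokenizer, and picks a fixed number `k` of the rarest words in each sentence. The function names are hypothetical, and this is an illustration rather than the exact procedure used in the experiment.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Lowercase and keep runs of letters/apostrophes (a deliberately simple tokenizer).
    return re.findall(r"[a-z']+", text.lower())


def build_frequencies(corpus: list[str]) -> Counter:
    # Count how often each token occurs across the whole reference corpus.
    counts = Counter()
    for doc in corpus:
        counts.update(tokenize(doc))
    return counts


def low_frequency_keywords(sentence: str, freqs: Counter, k: int = 2) -> list[str]:
    # Keep each distinct token once, in order of first appearance.
    tokens = list(dict.fromkeys(tokenize(sentence)))
    # Rank by corpus frequency, rarest first; unseen words count as 0 (rarest).
    # sorted() is stable, so ties keep their original sentence order.
    ranked = sorted(tokens, key=lambda w: freqs[w])
    return ranked[:k]


corpus = ["the cat sat on the mat", "the dog sat on the log", "a cat chased a dog"]
freqs = build_frequencies(corpus)
print(low_frequency_keywords("the cat sat on the mat", freqs, k=2))  # ['mat', 'cat']
```

Rare words tend to carry more content than frequent function words, which is why they can serve as a cheap conditioning signal. A more robust extractor (for example, one based on TF-IDF or part-of-speech filtering) could be swapped in behind the same interface.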

The University of Auckland provides me with a flexible and resourceful environment. I usually study in my office, which makes it convenient to discuss problems with other PhD students and professors in the Computer Science department. It always helps to talk to someone else when you face an issue, and working with outstanding people saves me a lot of time.

I believe the topic I am working on, knowledge-driven text generation, will become useful and popular in the near future. At the same time, many other people are researching natural language processing, so I enjoy chasing the most cutting-edge technologies and collaborating with smart people in this area.

Most of the PhD students in computer science I know study assiduously and perseveringly, and I am happy to be able to study alongside them. I also hope to improve my working efficiency. Although the time invested is an important factor, in this fast-growing research area it is more important to propose applicable systems, get them working properly and analyze the experimental results quickly. I hope my research results will help advance the field of natural language processing in time.

The biggest challenge for many postgraduate students is applying their research outcomes in practice. While studying at the university, I also work for a company that develops language models to help students improve their writing. It is a good opportunity for me to put what I have learned into practice.

If I had the chance to collaborate across the University, I would like to work with students from the Department of Mathematics, because the proposed language model needs a substantial body of mathematical derivation and theory to support it. Besides mathematics, the Business School would be another good choice for collaboration: there is considerable evidence that business management is a fresh and broad market for natural language processing technology to enter.

My advice to younger students is to choose a topic you are interested in and be prepared to work hard. Keep a balanced mind when juggling many heavy tasks, and train your time-management skills. In summary: choose an interesting research topic, a responsible supervisor and a suitable study environment.