Traditional Simultaneous Localization and Mapping (SLAM) has relied on feature descriptors for robust localization and mapping. Recent advances in Convolutional Neural Networks (CNNs) have allowed object instances to be used in place of feature descriptors in SLAM.

Loop closure detection (LCD) is an essential component of SLAM for minimizing localization drift. Many state-of-the-art LCD algorithms use visual Bag-of-Words (vBoW), which is robust against partial occlusions in a scene but cannot capture the semantics of feature points or the spatial relationships between them. CNN object extraction can address these issues by providing semantic labels and spatial relationships between objects in a scene, and previous work has mainly focused on replacing vBoW with CNN-derived features.

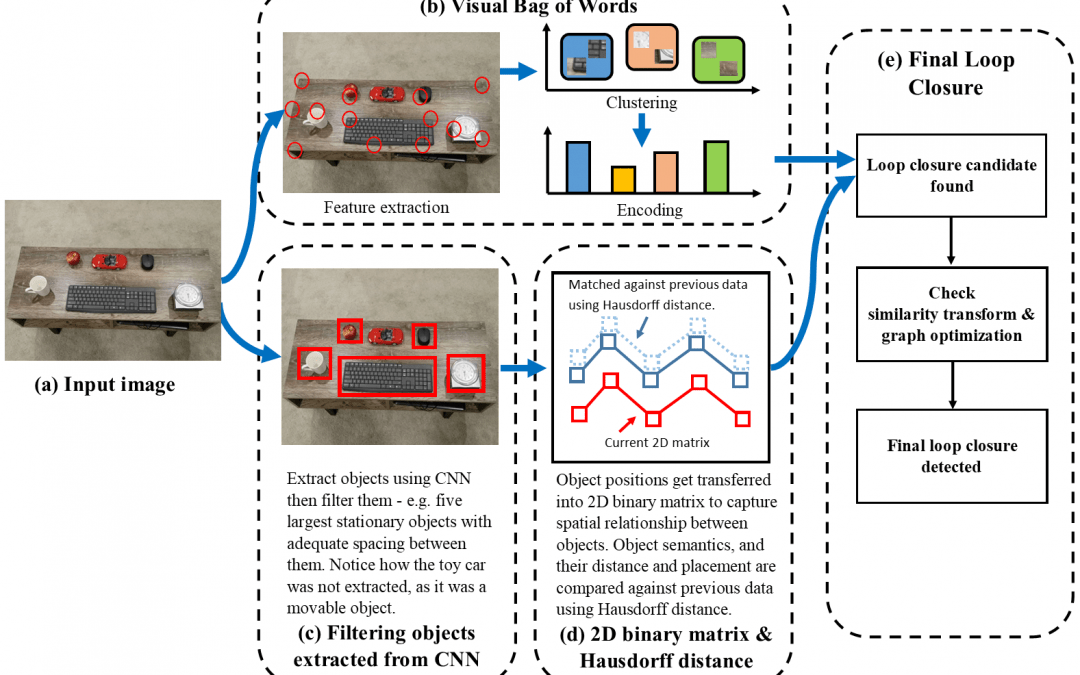

In contrast to prior work, we propose the SymbioSLAM framework with a novel dual loop closure detection (D-LCD) component that uses both CNN-extracted objects and vBoW. When used in tandem, the added elements of object semantics and spatial awareness create a complementary, more robust loop closure detection system. The proposed D-LCD component uses a simple and elegant object filtering method that mimics the human brain, matrix decomposition for scale-invariant semantic matching, and a novel use of Hausdorff distance with temporal constraints for finding loop closure candidates.
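To make the last ingredient concrete, the following is a minimal sketch of how a Hausdorff distance with a temporal constraint could be used to shortlist loop closure candidates. It is not the SymbioSLAM implementation: the text does not specify how objects are represented or how the constraint is applied, so the sketch assumes each frame is reduced to a set of 2D object centroids and that the temporal constraint is a minimum frame-index gap. The names `hausdorff`, `loop_candidates`, `max_dist`, and `min_gap` are hypothetical, and semantic labels and scale invariance are deliberately left out.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]  # assumed representation: 2D object centroid


def directed_hausdorff(a: Sequence[Point], b: Sequence[Point]) -> float:
    """Largest distance from any point in `a` to its nearest point in `b`."""
    return max(min(math.dist(p, q) for q in b) for p in a)


def hausdorff(a: Sequence[Point], b: Sequence[Point]) -> float:
    """Symmetric Hausdorff distance between two non-empty point sets."""
    return max(directed_hausdorff(a, b), directed_hausdorff(b, a))


def loop_candidates(
    frames: List[Sequence[Point]],
    query_idx: int,
    max_dist: float,
    min_gap: int,
) -> List[Tuple[int, float]]:
    """Return (frame index, distance) pairs for earlier frames that are
    at least `min_gap` frames older than the query (assumed temporal
    constraint) and within `max_dist` of it in Hausdorff distance."""
    query = frames[query_idx]
    candidates = []
    if not query:
        return candidates
    # Only consider frames with index <= query_idx - min_gap.
    for idx in range(max(0, query_idx - min_gap + 1)):
        if not frames[idx]:
            continue  # no detected objects, nothing to compare
        d = hausdorff(frames[idx], query)
        if d <= max_dist:
            candidates.append((idx, d))
    return sorted(candidates, key=lambda pair: pair[1])
```

The minimum gap keeps temporally adjacent frames, which look alike simply because the camera has barely moved, from being reported as loop closures. In a dual scheme such as the one described, candidates like these would presumably be cross-checked against vBoW matches before a loop is accepted.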

Although the proposed SymbioSLAM framework with D-LCD is already suitable for many different datasets, it needs further improvements and extensions to work well in more general settings. This PhD aims to extend SymbioSLAM to a wider range of situations and to clearly identify its limitations.