AI Reading Group

The AI Reading Group meets bi-weekly. Members present and discuss papers from across AI, including AI ethics, machine learning, natural language processing, selection bias, and computer vision. Join us to discuss ideas and seminal papers in AI research and to understand current developments and debates in the field!

Sessions are held every second Thursday (alternating with the ML Student Seminars), 2-3pm in 303S-561.

Papers

Members of the group take turns selecting papers, and the paper schedule is announced two weeks in advance. We encourage everyone to read the paper before the session. During each session, members discuss the paper's strengths, weaknesses, impact, and novelty.

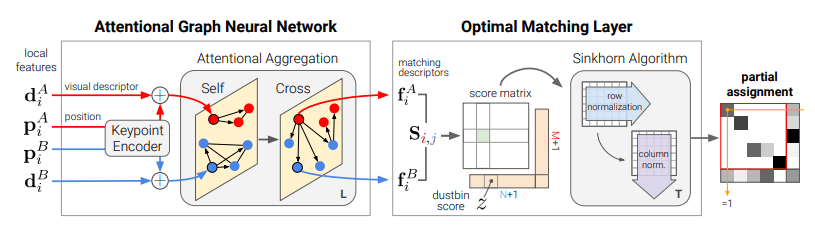

AI Reading Group 06/24/21: SuperGlue: Learning Feature Matching with Graph Neural Networks

Where and when: Thursday, June 24 at 2-3pm in 303S-561

Abstract: This paper introduces SuperGlue, a neural network that matches two sets of local features by jointly finding correspondences and rejecting non-matchable points. Assignments are estimated by solving a differentiable optimal transport problem, whose costs are predicted by a graph neural network. We introduce a flexible context aggregation mechanism based on attention, enabling SuperGlue to reason about the underlying 3D scene and...

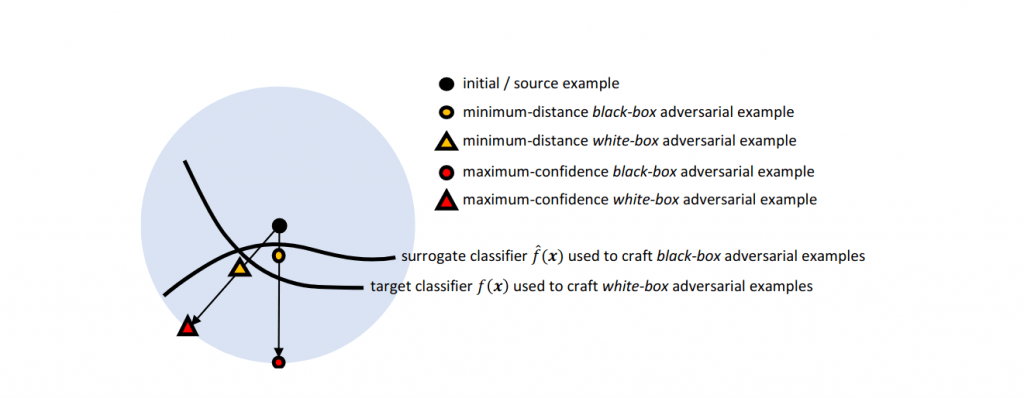

AI Reading Group 06/10/21: Why Do Adversarial Attacks Transfer? Explaining Transferability of Evasion and Poisoning Attacks

Where and when: Thursday, June 10 at 2-3pm in 303S-561

Transferability captures the ability of an attack against a machine-learning model to be effective against a different, potentially unknown, model. Empirical evidence for transferability has been shown in previous work, but the underlying reasons why an attack transfers or not are not yet well understood. In this paper, we present a comprehensive analysis aimed to investigate the transferability of both test-time evasion and...

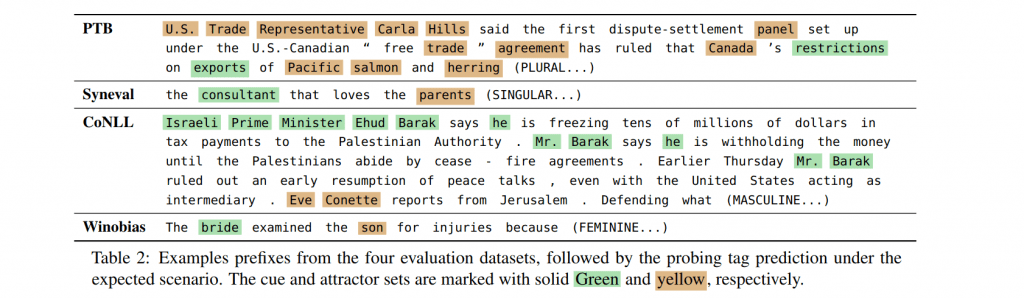

AI Reading Group 05/27/21: Evaluating Saliency Methods for Neural Language Models

Saliency methods are widely used to interpret neural network predictions, but different variants of saliency methods often disagree even on the interpretations of the same prediction made by the same model. In these cases, how do we identify when are these interpretations trustworthy enough to be used in analyses? To address this question, we conduct a comprehensive and quantitative evaluation of saliency methods on a fundamental category of NLP models: neural language models. We evaluate...

Organisers

Jonathan Kim