Kia Ora! I am Luke Chang, and I am passionate about building more reliable machine learning models: artificial intelligence that people can trust.

I started my machine learning journey by designing a transmission controller for an engine in my third-year undergraduate project. I was fascinated by how artificial intelligence can be integrated into everyday systems. When I learnt that even a state-of-the-art machine learning model trained on a large amount of data is vulnerable to malicious attacks, I decided to contribute to solving this problem.

Can you imagine the consequences of a self-driving vehicle wrongly identifying a stop sign as a speed limit sign? An attacker can deceive the computer just by making minor changes to the stop sign. This is called an adversarial attack, and the example shows how serious the threat can be. So far, there is no perfect defence against adversarial examples, and no machine learning model is immune to attack.

My research focuses on understanding why and how this vulnerability exists. After investigating the relationship between adversarial examples and classification models, I noticed that most adversarial examples fall into three regions of the hyperspace. First, they can be outliers, where an unconstrained input does not comply with the intended use case of the model. Second, they can lie close to the decision boundary of the model. Third, they can sit in a “pocket” formed by the decision boundary, where the attack is disguised because the model is overfitting the training data.

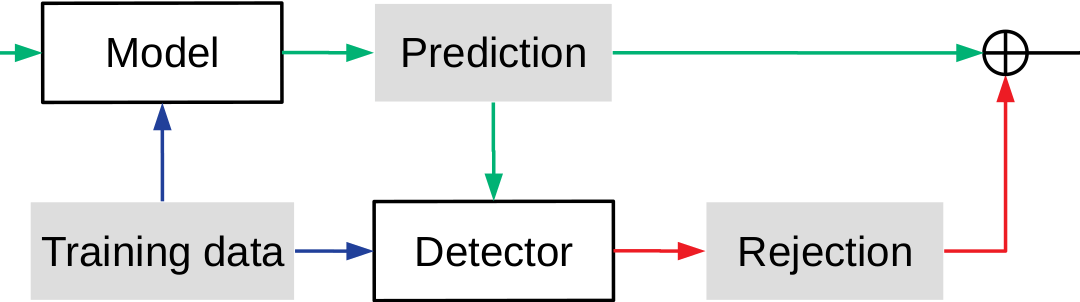

I developed a novel multi-stage defensive framework to check for these characteristics. The framework shows promising results on a wide range of machine learning models and different types of data. My goal is to make machine learning models more resilient to attacks, and I believe my research will have a significant impact on people’s daily lives.

Building a reproducible experiment isn’t easy, and it becomes harder when there are many moving parts involved: preprocessing the data, choosing the best machine learning model, executing attacks to break the model, and then finding a way to block the most vicious attack. I encountered a complexity I had never seen before. It’s challenging, but I find it very rewarding.

To my younger self: If it’s too easy to achieve, then it’s probably not worth pursuing. Just don’t give up.