Short Bio

Zachary Chase Lipton is the BP Junior Chair Assistant Professor of Operations Research and Machine Learning at Carnegie Mellon University and a Visiting Scientist at Amazon AI. He directs the Approximately Correct Machine Intelligence (ACMI) lab, whose research spans core machine learning methods, applications to clinical medicine and natural language processing, and the impact of automation on social systems. Current research focuses include, robustness under distribution shift, decision-making, applications of causal thinking to practical high-dimensional settings that resist stylized causal models, and AI ethics. He is the founder of the Approximately Correct blog (approximatelycorrect.com) and a co-author of Dive Into Deep Learning, an interactive open-source book drafted entirely through Jupyter notebooks. He can be found on Twitter (@zacharylipton), GitHub (@zackchase), or his lab’s website (acmilab.org).

Abstract

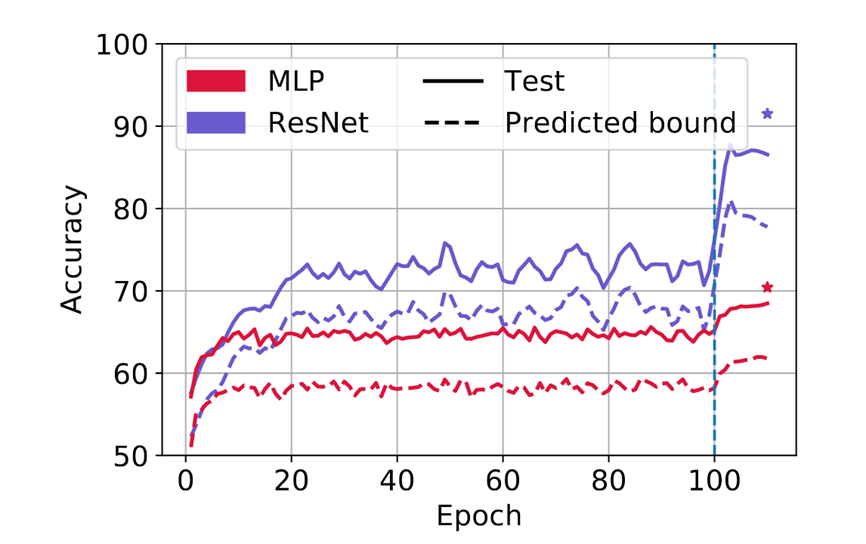

To assess generalization, machine learning scientists typically either (i) bound the generalization gap and then (after training) plug in the empirical risk to obtain a bound on the true risk; or (ii) validate empirically on holdout data. However, (i) typically yields vacuous guarantees for overparameterized models. Furthermore, (ii) shrinks the training set and its guarantee erodes with each re-use of the holdout set. In this paper, we introduce a method that leverages unlabeled data to produce generalization bounds. After augmenting our (labeled) training set with randomly labeled fresh examples, we train in the standard fashion. Whenever classifiers achieve low error on clean data and high error on noisy data, our bound provides a tight upper bound on the true risk. We prove that our bound is valid for 0-1 empirical risk minimization and with linear classifiers trained by gradient descent. Our approach is especially useful in conjunction with deep learning due to the early learning phenomenon whereby networks fit true labels before noisy labels but requires one intuitive assumption. Empirically, on canonical computer vision and NLP tasks, our bound provides non-vacuous generalization guarantees that track actual performance closely. This work provides practitioners with an option for certifying the generalization of deep nets even when unseen labeled data is unavailable and provides theoretical insights into the relationship between random label noise and generalization.

RSVP

If you want to join this seminar, please leave your name and email below, you will get the Zoom link once you click submit.