Speaker: Qiming Bao, PhD student, supervised by Jiamou Liu and Michael Witbrock

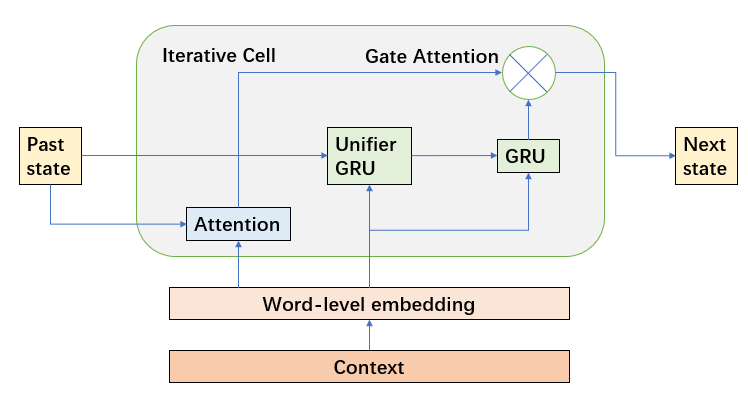

Abstract: Combining deep learning with symbolic reasoning aims to capitalize on the success of both fields and is drawing increasing attention. However, it is not yet known how much symbolic reasoning can be acquired by end-to-end neural networks. In this paper, we explore the possibility of migrating a neural-based symbolic reasoner to a neural-based natural-language reasoner, in the hope of performing natural-language soft reasoning tasks. Natural language is represented in a high-dimensional vector space, and reasoning is performed by an iterative memory neural network based on an RNN and an attention mechanism. We use three open-source natural-language soft reasoning datasets: PARARULE, CONCEPTRULE, and CONCEPTRULE Version 2. Our model is trained end-to-end to learn whether a logical context contains a given query. We find that an iterative memory neural network that converts character-level embeddings to word-level embeddings can achieve high test accuracy (86%) on the PARARULE dataset and higher than 90% test accuracy on the CONCEPTRULE and CONCEPTRULE Version 2 datasets, without relying on a pre-trained language model.
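
To make the idea in the abstract concrete, the following is a minimal, untrained sketch of iterative attention over a memory of context sentences, with word vectors built from character vectors. It is not the authors' model: the names `char_vector`, `word_vector`, `sentence_vector`, and `iterate_memory`, the embedding size `DIM`, the averaging of character vectors (in place of a learned RNN), and the fixed 0.5 blending update are all assumptions chosen for illustration.

```python
import math
import random

DIM = 8  # hypothetical embedding size, chosen only for this sketch


def char_vector(ch, dim=DIM):
    # Deterministic pseudo-random vector per character; a stand-in for a
    # learned character embedding (assumption, not from the paper).
    rng = random.Random(ord(ch))
    return [rng.uniform(-1.0, 1.0) for _ in range(dim)]


def word_vector(word, dim=DIM):
    # Build a word-level vector from character-level vectors by averaging.
    # The abstract describes an RNN-based model; averaging is a simplification.
    vecs = [char_vector(c, dim) for c in word]
    return [sum(col) / len(vecs) for col in zip(*vecs)]


def sentence_vector(sentence, dim=DIM):
    # Average the word vectors of a whitespace-tokenized sentence.
    vecs = [word_vector(w, dim) for w in sentence.lower().split()]
    return [sum(col) / len(vecs) for col in zip(*vecs)]


def softmax(scores):
    # Numerically stable softmax over a list of floats.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def iterate_memory(context, query, steps=3):
    # Memory holds one vector per context sentence (fact or rule).
    memory = [sentence_vector(s) for s in context]
    state = sentence_vector(query)
    history = []
    for _ in range(steps):
        # Attend over the memory using the current state as the key.
        weights = softmax([dot(state, m) for m in memory])
        read = [sum(w * m[i] for w, m in zip(weights, memory))
                for i in range(DIM)]
        # Untrained update: blend the state with the attended memory.
        # A trained model would use a learned recurrent update here.
        state = [0.5 * s + 0.5 * r for s, r in zip(state, read)]
        history.append(weights)
    return state, history


context = ["Bob is kind.", "If someone is kind then they are nice."]
state, history = iterate_memory(context, "Bob is nice.", steps=3)
for step, weights in enumerate(history, start=1):
    print(step, [round(w, 3) for w in weights])
```

Each iteration yields one attention distribution over the context sentences. In a trained model of this kind, the final state would typically feed a classifier that predicts whether the query follows from the context; here the weights carry no learned meaning and only show the data flow.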